The Rise and Fall of "Vibe Coding"

TLDR: It's just called "coding" now.

Remember the word "mashup"?

Sometimes it's hard for me to believe something happened, even if I was there.

But I clearly remember a moment from about twenty years ago when we lacked a word for a new kind of web application – a sort of Frankenstein's app, stitched together from live data streams, RSS, scraped content, and remote APIs. This was a pretty big deal at the time. You didn't need to think about servers once Slicehost and EC2 hit the scene. Sendgrid meant skipping all the SMTP drudgery, and let's just say I remember the trauma of building an international mobile app before Twilio.

All of a sudden we were entering a world in which one engineer could achieve enormous leverage. We'd go to a hackathon and build something in a weekend that would previously take months or even years, and yet, it didn't really feel like you'd done anything.

You'd hear things like "The SMS just hit my phone in one line of code. It took me two weeks last time..." and "We didn't think we could get this check-in app off the ground, because we'd need a few years to build up our venue data. Then we realized Yahoo Maps had everything we needed," and you'd know that something was changing.

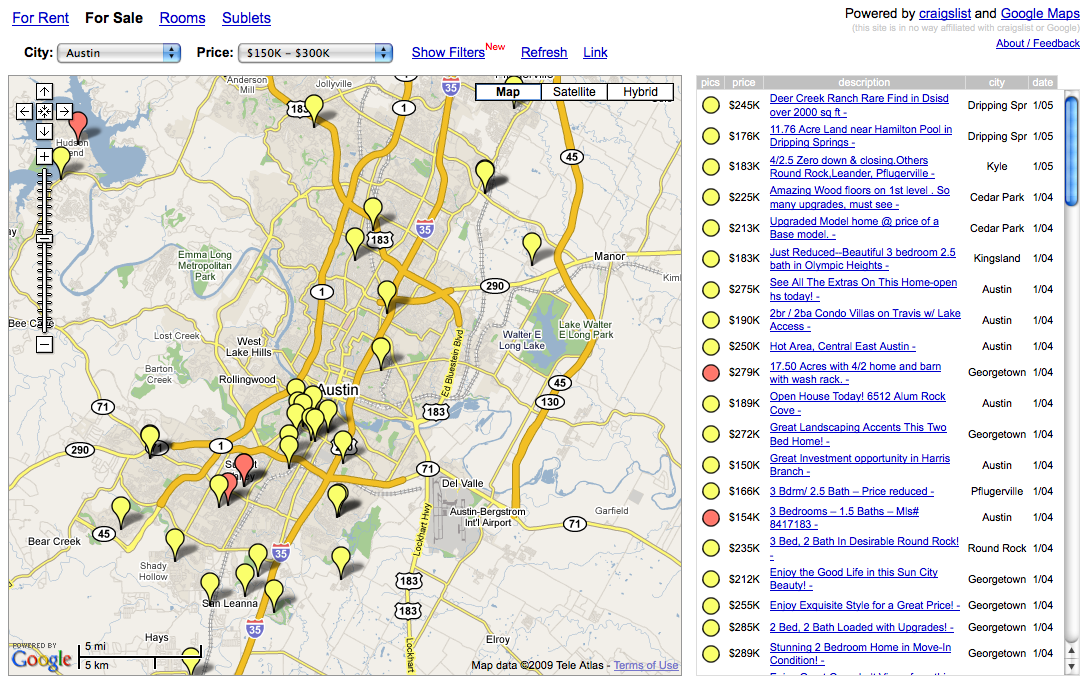

Compared to what came before, this kind of app really was different, and when something is really different, it needs a different word. Someone started calling them mashups, and pretty soon we all did. The first real mashup I saw was a mashup of Craigslist and Google Maps called HousingMaps. One (admittedly brilliant) guy made it by himself, and it wasn't pretty, but within a month, everyone I knew was using it to visually chart the "for-rent" section of Craigslist, mostly just to stay close to Dolores Park. Mashups were all the rage! An entire newspaper was even named after this trend.

But now, in 2025? I haven't heard the word "mashup" in software in at least ten years. That's because today, this is simply how software is built. The exception became the rule, and the word evaporated, just like NoSQL and Cloud Computing.

And within a year or two, I think vibe coding will be on that list.

What's different now?

I don't think it's a particularly novel observation to point out that tech terminology (or really, any terminology) follows this sort of boom-and-bust cycle. If a word stays in use long enough, it at least lives to have its meaning irreversibly altered (my fav example is napron). And in some cases, the primary connotation of a word can morph into its exact denotative opposite, such as sanction, oversight, handicap, early stage investing, and, most annoyingly, literally.

But I digress. The point is that even in the stablest of times, language evolves, meanings change, and whole words can come and go. The cycle goes like this: A word is born, its usage begins to proliferate through the social graph and the geo graph. And then much later, as its meaning changes, those deltas form a second and third and fourth wave across the graph. Sometimes that secondary wave is interrupted, uneven, or simply too slow, and we get a real divergence. With a frequency of decades and centuries, this is what leads to new dialects and even new languages (and TERF wars... JK).

What's possibly unique to software, though, is the speed at which this process happens, compressing the cycles.

What's unique to AI Software is that, I believe, this speed has crossed a distinct threshold: there is a difference in degree so substantial that it becomes a difference in kind. Cycle times are compressing from decades to months, and in some cases to weeks. By the time a concept reaches awareness even amongst practitioners, the bleeding edge has redefined it. Terms and facts become outdated before they're fully adopted even by other experts. We're experiencing a kind of linguistic turbulence where words can't settle into meaning before being swept away.

The right side of history

In AI tech in June 2025, word is spreading of the limitations of (non-graph) RAG, even as those limitations are largely being erased. And many engineers are so attached to their priors that they're about to waste six months on Neo4j. (But seriously, who hasn't at this point?) And yet while they were planning that project, LLM context windows doubled and doubled again, embedding models improved, embedding techniques improved, and elite long-vector search tech became accessible and affordable even to novices. Linear reasoning made significant strides in the two weeks since Apple Research published their earth-shattering paper about last gen's models. Of course the paper made its rounds, but OpenAI, Anthropic, and Google can already do some of the things this paper pooh-poohs. (As an aside, I've met full-fledged humans who cannot reason linearly, and one even managed to get a Harvard MBA.) Point is that in production AI software, at least, the smart money is betting on the models. In the past month alone:

- Cursor 1.0 released

- Claude Opus 4 launched

- Claude Code went GA and got built into JetBrains

- Turbopuffer went GA

- Veo 3 launched

- OpenAI o3 Pro launched

- o3 price reduced by 80%

- Google surpassed all of them, arguably

It would be insanity to be pessimistic about the improvements we're yet to see. And I guess what I'm trying to say is this: what's true in production is true in productivity. If you played around with Cursor and Sonnet 3.5 a few months ago and found it lacking, join the crowd – but don't get attached to your conclusions. If you haven't spent even half a day with Cursor 1.0 and Claude Code with Opus 4, your every take is unserious. Don't you dare dismiss "vibe coders" as being the ones making assumptions, because they're getting up-to-date knowledge every fucking day... and every day, your own knowledge becomes noticeably more antiquated.

A few weeks back, Jj was publishing The Copilot Delusion on the exact same day it was being rendered out-of-touch by the launches of Opus 4, Sonnet 4, and Claude Code. Y'all are out here repeating memes like "Vibe coding is cute until someone has to maintain it" or "Seems okay for frontend code, but not hard stuff like I do" (don't get me started on the assumptions in that statement). These viral takes are somehow both new (relatively) and tired (to those of us who are actually getting our hands dirty).

If you read the above article, now check this one out: My AI Skeptic Friends Are All Nuts. Which viewpoint do you really think is going to age better?

Kyle Wild

Berkeley, CA

June 14, 2025